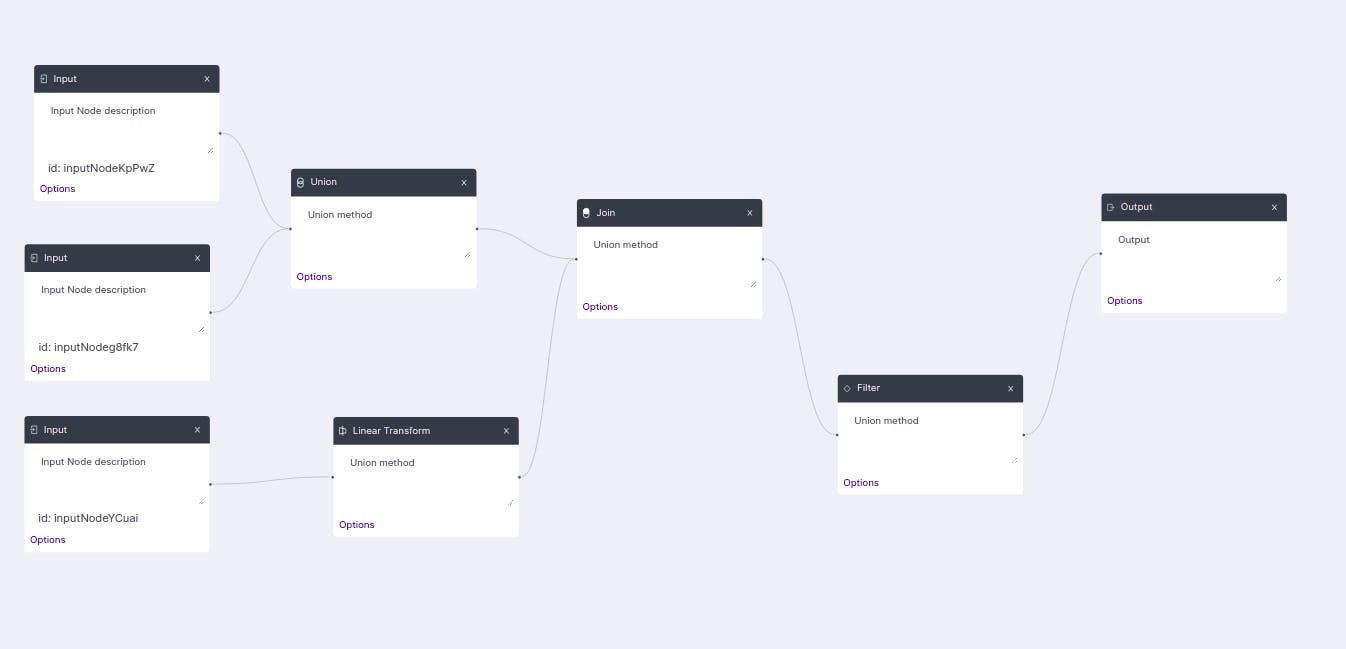

The project was to create a pipeline or a flowchart in the frontend and send the flowchart information to the backend for further processing. We used reactflow package to create a flow diagram by dragging and dropping nodes to the canvas in the frontend. The backend team had their own sets of protocols defined for describing the node types and their related information. And frontend team created their own data. This difference in data consent was probably because of the gap in communication and also the whole scope of the project was somehow blurry to both teams. Though, Independently it seemed that the backend was working fine and so was the frontend, however, what remained was the communication between the teams.

Suppose the backend was expecting JSON data something like this:

{"jpt_pipeline_data": [

{

"id":"124",

"plugin_type": "input",

"alias": "source1",

"plugin_id": "hello123",

"plugin_config": {

"type": "dynamic",

"dynamic_elements": ["source_uri"],

"header_exists": True,

"deliminator": ",",

"headers": ["ISIN", "InstrTypeGrp", "InstrType",

"CompShortName", "VotingShares", "OShs",

"Name", "ExchangeName", "Market", "U-GKey",

"Val", "NetVal"],

"persist": True,

}

},

This seemed alright at first glance. But depending on the plugin_type and plugin_id there could be numerous variations of data on plugin_config. Also, initially, the backend was developed with REST API, and later as the office was slowly adopting graphQL in all other projects the backend team reluctantly switched the APIs to graphQL. This change posed a lot of problems while generating a schema for this type of JSON data. For REST APIs where the schema isn't defined for data, this wasn't an issue and was working well. Since the first sprint was coming to an end, taking some extra time to create a better schema wasn't acceptable (despite my efforts to suggest otherwise ), so they ended up accepting the stringified version of the JSON data from the frontend. Shipped is always better than perfect, right?

There were possibilities of a lot of lines coming in and out through nodes designed in the canvas. The flowchart could be more complex as the system grew, so it could take some time for the user of the system to create a desired pipeline in the canvas. Therefore, it would make sense to keep updating the backend about intermediate changes on the canvas. But sadly enough, intermediate updating was out of the scope of the first sprint. So, all the data of the pipeline designed by the user on the canvas would be sent to the backend in bulk once the user clicks a "save" button. Also, it was sort of promised by the backend team that intermediate updating would be a feature in the next sprint.

The programming part of the canvas was abstracted beautifully by reactflow. So the frontend team, besides some basic node designs and CRUD operations, had some time to be ready for the backend to support intermediate updating. Besides, it would break the frontend architecture a lot if the team sent bulk data in the first sprint and made changes to send intermediate updates to the backend. So, we decided to create our own middleware in the frontend which would basically act as the intermediate backend keeping track of all the changes of the canvas. The middleware would basically function as a state tracker of the canvas and perform data translation from backend response to frontend compatible data and frontend data to backend compatible request.

The pipeline designer page had a lot of components & children within itself. We needed to track the state of the designer so we needed a state management library. We discussed, at length, better packages like redux with redux-toolkit, jotai, zustand etc. There was a discussion on the use of context API as well. Passing down states with context API sounded performance heavy as with every update all the components subscribing to the context would re-render. But then we realized we just needed to keep track of the state, we didn't actually need to render anything on the screen. So we could simply not use react state and rather use some sort of persisting data that we could retrieve once the user clicks the save button. By persisting data, I mean the data that wouldn't be destroyed on subsequent renders like react state but without triggering any UI re-rendering if the data is updated unlike react state. This seemed like a perfect use case of useRef.

So, we created a useMiddleware hook composing the middleware ref to track the states and functions to update and manipulate the state.

function useMiddleWare() {

const nodeRef = useRef<NodeDataType | null>(null);

// add the initial state of the node

function addNode(data: IAddNodeProps) {

const { id, type, position } = data;

if (!nodeRef.current) {

nodeRef.current = new Map();

}

nodeRef.current.set(id, { id, type, position, connector: { forward: null, backward: null } });

return data;

}

function removeNode(nodeId:ID){

// remove node here

}

function moveNode(nodeId:ID,position:INodePosition){

// update the position of node

}

function convertToFrontendCompatibleFormat(backendData:( (IProcessedSource | IProcessedSink |

IProcessedTransform )[]){

// convert to frontend compatible format

}

//... so on

}

Once the useMiddleware hook was created which simply has a middleware ref which gets manipulated by several functions within. The middleware is then passed along to all the children components using context API.

// some imports and type definitions

//.....

// creating context

const ContextWrapper = React.createContext<ContextWrapperInterface | null>(null);

// creating provider and passing the middleware value to the context provider

function PipelineContextProvider({ children}:contextProps) {

const middleware = useMiddleWare();

const context: ContextWrapperInterface = {

middleware,

};

return <ContextWrapper.Provider value={context}>{children}</ContextWrapper.Provider>;

};

// creating a consumer hook with the context API with useContext hook

function usePipelineContext() {

const context = React.useContext(ContextWrapper);

if (context === undefined) {

throw new Error(

'useNewOrderContext should be used in components which are wrapped by NewOrderContextProvider'

);

}

if (!context) throw new Error('the context data cannot be null');

return context;

}

Now once the context provider and its hook for consuming the context value is created the canvas page was wholly wrapped by the context provider so that all the children components can have access to the value that the context provides which in our case is the middleware ref.

function Pipeline(){

return(

<PipelineContextProvider>

... other components

</PipelineContextProvider>

)

}

function TransformNode(){

const middleware = usePipelineContext();

// use the middleware functions like middleware.addNode() wherever required

return (

<div>

</div>

)

}

Yes, it seems like a daunting task for the frontend team to create a middleware using context API and preserving its value with ref just because the backend team got a lot of tasks on hand. And even though after the backend teams successfully release the intermediate updating feature, the middleware would still function actively as a state tracker on the frontend portion and as a data manipulator/translator. Also, The developer handling the design of canvas using reactFlow needn't worry about the data interacting with the backend as an abstraction of the data flow would be managed entirely by the middleware.